About

Abstract

Although artificial intelligence holds promise for addressing societal challenges, the questions of exactly which tasks to automate, and to what extent, remain understudied. We approach the problem of task delegability from a human-centered perspective by developing a framework for human perception of task delegation to artificial intelligence. We consider four high-level factors that can contribute to a delegation decision: motivation, difficulty, risk, and trust. To obtain an empirical understanding of human preferences in different tasks, we build a dataset of 100 tasks from academic papers, popular media portrayal of AI, and everyday life. For each task, we administer a survey to collect judgments of each factor and ask subjects to pick the extent to which they prefer AI involvement. We find little preference for full AI control and a strong preference for machine-in-the-loop designs, in which humans play the leading role. Our framework can effectively predict human preferences in the degree of AI assistance. Among the four factors, trust is the most predictive of human preferences for optimal human-machine delegation. In all, this work is a first step towards quantifying the delegability of traditionally human tasks to AI. We hope to highlight this as an important research area for human-centered computing.

A Framework for Task Delegability

| # | Factors | Components |
|---|---|---|
| 1 | Motivation | Intrinsic motivation, goals, utility |
| 2 | Difficulty | Social skills, creativity, effort required, expertise required, human ability |
| 3 | Risk | Accountability, uncertainty, impact |
| 4 | Trust | Machine ability, interpretability, value alignment |

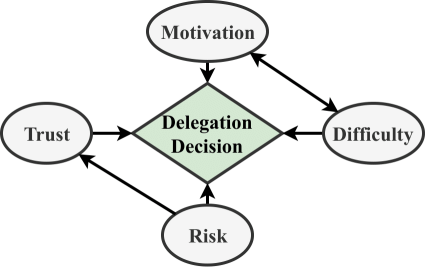

To explain human preferences for task delegation to AI, we develop a framework with four factors: a person’s motivation in undertaking the task, their perception of the task’s difficulty, their perception of the risk associated with accomplishing the task, and finally their trust in the AI agent.

We choose these factors because motivation, difficulty, and risk respectively cover why a person chooses to perform a task, the process of performing a task, and the outcome, while trust captures the interaction between the person and the AI.
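As a rough illustration of the framework's structure, the factors and components from the table above can be written down as a simple mapping, with per-component ratings averaged into one score per factor. This is only a sketch: the 1-5 rating scale, the plain averaging, and the names `FRAMEWORK` and `factor_scores` are assumptions made for the example, not the survey instrument or analysis used in the study.

```python
from statistics import mean

# Factors and components as listed in the framework table.
FRAMEWORK = {
    "motivation": ["intrinsic motivation", "goals", "utility"],
    "difficulty": ["social skills", "creativity", "effort required",
                   "expertise required", "human ability"],
    "risk": ["accountability", "uncertainty", "impact"],
    "trust": ["machine ability", "interpretability", "value alignment"],
}


def factor_scores(ratings):
    """Average component ratings into one score per factor.

    `ratings` maps a component name to a numeric rating (a hypothetical
    1-5 scale). Components missing from `ratings` are skipped, and a
    factor with no rated components gets None.

    Assumption: every component is averaged as-is. A real analysis would
    need to decide how to treat components that may point in the opposite
    direction (for example, higher human ability suggesting lower
    difficulty).
    """
    scores = {}
    for factor, components in FRAMEWORK.items():
        rated = [ratings[c] for c in components if c in ratings]
        scores[factor] = mean(rated) if rated else None
    return scores
```

Calling `factor_scores` with ratings for only the three trust components returns a trust score and `None` for the other three factors, which keeps partially answered surveys usable in this toy setup.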

Motivation

Motivation is an energizing factor that helps initiate, sustain, and regulate task-related actions by directing our attention towards goals or values. Affective (emotional) and cognitive processes are thought to be collectively responsible for driving action, so we consider intrinsic motivation and goals as two components of motivation. Specifically, we distinguish between learning goals and performance goals, as indicated by Goal Setting Theory. Finally, the expected utility of a task captures its value from a rational cost-benefit analysis perspective. Note that a task may be of high intrinsic motivation yet low utility, e.g., reading a novel.

Difficulty

Difficulty is a subjective measure reflecting the cost of performing a task. For delegation, we frame difficulty as the interplay between task requirements and the ability of a person to meet those requirements. Some tasks are difficult because they are time-consuming or laborious; others, because of the required training or expertise. To differentiate the two, we include effort required and expertise required as components in difficulty. The third component, belief about abilities possessed, can also be thought of as task-specific self-confidence (also called self-efficacy) and has been empirically shown to predict allocation strategies between people and automation.

Additionally, we contextualize our difficulty measures with two specific skill requirements: the amount of creativity and social skills required. We choose these because they are considered more difficult for machines than for humans.

Risk

Real-world tasks involve uncertainty and risk in accomplishing the task, so a rational decision on delegation involves more than just cost and benefit. Delegation amounts to a bet: a choice that weighs each agent's probability of accomplishing the goal against the risks and costs of relying on that agent. Perkins et al. define risk practically as a “probability of harm or loss,” finding that people rely on automation less as the probability of mortality increases. Responsibility or accountability may play a role if delegation is seen as a way to share blame. We thus decompose risk into three components: personal accountability for the task outcome; uncertainty, or the probability of errors; and scope of impact, or the cost or magnitude of those errors.
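The idea of delegation as a bet can be made concrete with a small expected-cost comparison. The sketch below is an illustration under stated assumptions, not a model from the study: it assumes each agent has a known error probability, a known cost per error, and a known effort cost, and it ignores accountability entirely. The names `Agent`, `expected_cost`, and `choose_agent` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    p_error: float      # probability of an error (uncertainty)
    error_cost: float   # cost or magnitude of an error (scope of impact)
    effort_cost: float  # cost of having this agent perform the task


def expected_cost(agent):
    """Effort cost plus the probability-weighted cost of an error."""
    return agent.effort_cost + agent.p_error * agent.error_cost


def choose_agent(agents):
    """Pick the agent with the lowest expected cost (assumed decision rule)."""
    return min(agents, key=expected_cost)


# Hypothetical numbers: the AI is cheaper to use but errs more often.
human = Agent("human", p_error=0.05, error_cost=100.0, effort_cost=10.0)
ai = Agent("ai", p_error=0.20, error_cost=100.0, effort_cost=1.0)
preferred = choose_agent([human, ai])
```

With these made-up numbers the high cost of an error outweighs the AI's lower effort cost, so the human is preferred; lowering `error_cost` for both agents shifts the choice towards the AI. Survey respondents need not reason this way, which is one reason the framework also measures accountability and trust.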

Trust

Trust captures how people deal with risk or uncertainty. We use Lee and See’s definition of trust as “the attitude that an agent will help achieve an individual’s goals in a situation characterized by uncertainty and vulnerability.” Trust is generally regarded as the most salient factor in reliance on automation. Here, we consider trust as a combination of the perceived ability of the AI agent, agent interpretability (ability to explain itself), and perceived value alignment. These correspond, respectively, to the components of trust in automation in Lee and See: performance, process, and purpose.

Limitations

We stress that this research is a first attempt at quantifying the delegability of different tasks. The four-factor framework we present here (motivation, difficulty, risk, and trust) is by no means comprehensive or necessarily the correct approach. We also acknowledge the limitations of Mechanical Turk as a data collection method for our survey, and the implicit impact of our survey wording and choice of tasks on the results. Finally, we limited the survey respondents to United States residents; we expect the results to depend on cultural customs and norms.

Nevertheless, we suggest that the direction is a valuable one. Quantification of which tasks people actually want delegated and why, alongside the amount and type of machine assistance for each task, would go a long way towards more efficient and responsible allocation of industry and academic research resources. Further, a better understanding of the factors behind what makes one task more delegable to AI than another would improve our ability to address AI shortcomings, improve usability, and generalize task-specific ML, HCI, or HRI results to other tasks in the task space.

Our hope is that this preliminary work can assist the community in developing more rigorous methods of supporting human-centered machine learning and automation.

About Us

We are a group of researchers at the University of Colorado, Boulder, interested in human-centered machine learning. This research project was led by Brian Lubars and Professor Chenhao Tan.